Potential G2-item cycles under serializable isolation

| From: | Kyle Kingsbury <aphyr(at)jepsen(dot)io> |

|---|---|

| To: | pgsql-bugs(at)lists(dot)postgresql(dot)org |

| Subject: | Potential G2-item cycles under serializable isolation |

| Date: | 2020-06-01 02:24:57 |

| Message-ID: | db7b729d-0226-d162-a126-8a8ab2dc4443@jepsen.io |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-bugs |

Hello everyone!

First off, I'm sorry for *gestures vaguely* all of this. Second, I think I may

have found a serializability violation in Postgres 12.3, involving

anti-dependency cycles around row insertion.

For background, my name is Kyle Kingsbury, and I test distributed database

safety properties (https://jepsen.io) I started looking at Stolon + PostgreSQL

this week, encountered this behavior, and managed to narrow it down to a single

Postgres node without Stolon at all. Normally I test with a variety of faults

(network partitions, crashes, etc.), but this behavior occurs in healthy

processes without any faults.

This test uses the Jepsen testing library (https://github.com/jepsen-io/jepsen)

and the Elle isolation checker (https://github.com/jepsen-io/elle) If you're

wondering "why would you ever do transactions like this", the Elle paper might

provide some helpful background: https://arxiv.org/abs/2003.10554. We install

Postgres 12.3-1.pgdg100+1 on a Debian 10 node, using the official Postgres

repository at http://apt.postgresql.org/pub/repos/apt/. Each client uses its own

JDBC connection, on a single thread, in a single JVM process. We use the JDBC

postgres driver (org.postgresql/postgresql 42.2.12). The JDK is 1.8.0_40-b25.

Logically, the test performs randomly generated transactions over a set of lists

identified by integer keys. Each operation is either a read, which returns the

current value of the list for a given key, or an append, which adds a unique

element to the end of the list for a given key. In Postgres, we store these

objects in tables like so:

create table if not exists txn0 (id int not null primary key,

sk int not null,

val text)

id is the key, and text stores comma-separated elements. sk is a secondary key,

which is unused here. We create three tables like this (txn0, txn1, txn2).

Records are striped across tables by hashing their key.

We set the session transaction isolation level immediately after opening every

connection:

SET SESSION CHARACTERISTICS AS TRANSACTION ISOLATION LEVEL SERIALIZABLE

... and request SERIALIZABLE for each JDBC transaction as well.

Our reads are of the form:

select (val) from txn0 where id = $1

And our writes are of the form:

insert into txn1 as t (id, sk, val) values ($1, $2, $3) on conflict (id) do

update set val = CONCAT(t.val, ',', $4) where t.id = $5

where $1 and $5 are the key, and $2, $3, and $4 are the element we'd like to

append to the list.

You can try these transactions for yourself using Jepsen f47eb25. You'll need a

Jepsen environment; see

https://github.com/jepsen-io/jepsen#setting-up-a-jepsen-environment for details.

cd jepsen/stolon

lein run test-all -w append --nemesis none --max-writes-per-key 8 --node n1

--just-postgres --concurrency 50 -r 1000

Which typically produces, after about a minute, anomalies like the following:

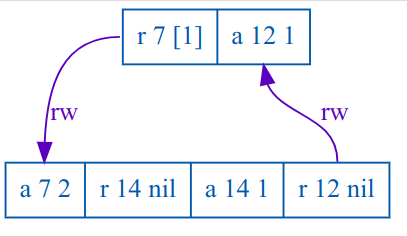

G2-item #1

Let:

T1 = {:type :ok, :f :txn, :value [[:r 7 [1]] [:append 12 1]], :time 95024280,

:process 5, :index 50}

T2 = {:type :ok, :f :txn, :value [[:append 7 2] [:r 14 nil] [:append 14 1]

[:r 12 nil]], :time 98700211, :process 6, :index 70}

Then:

- T1 < T2, because T1 did not observe T2's append of 2 to 7.

- However, T2 < T1, because T2 observed the initial (nil) state of 12, which

T1 created by appending 1: a contradiction!

A dependency graph of this anomaly is attached to this email: lines marked `rw`

indicate read-write anti-dependencies between specific operations in each

transaction. Because there are multiple rw edges in this graph, it suggests the

presence of G2-item. It is also possible, of course, that worse anomalies

happened (e.g. aborted read) which caused us to incorrectly infer this causal

graph, but I suspect this is not the case.

You can find a full copy of this particular test run, including a history of

every transaction, Postgres logs, and a pcap file containing all client-server

interactions, at

http://jepsen.io.s3.amazonaws.com/analyses/postgresql-12.3/20200531T215019.000-0400.zip

If you'd like to look at the test code, see

https://github.com/jepsen-io/jepsen/tree/f47eb25ab32529a7b66f1dfdd3b5ef2fc84ed778/stolon/src/jepsen.

Specifically, setup code is here:

... and the workload responsible for constructing and submitting transactions is

here:

These anomalies appear limited to G2-item: I haven't seen G-single (read skew),

cyclic information flow, aborted reads, dirty writes, etc. It also looks as if

every anomaly involves a *nil* read, which suggests (and I know the bug report

guidelines say not to speculate, but experience suggests this might be helpful)

that there is something special about row insertion. In TiDB, for instance, we

found that G2-item anomalies with `select ... for update` was linked to the fact

that TiDB's lock manager couldn't lock keys which hadn't been created yet

(https://jepsen.io/analyses/tidb-2.1.7#select-for-update) I don't know anything

about Postgres' internals, but I hope this is of some use!

Is this... known behavior? Unexpected? Are there configuration flags or client

settings I should double-check? I know this is all a bit much, so I'm happy to

answer any questions you might have. :-)

Sincerely,

--Kyle

| Attachment | Content-Type | Size |

|---|---|---|

|

image/png | 12.2 KB |

Responses

- Re: Potential G2-item cycles under serializable isolation at 2020-06-01 03:04:14 from Peter Geoghegan

- Re: Potential G2-item cycles under serializable isolation at 2020-06-04 02:15:24 from Peter Geoghegan

Browse pgsql-bugs by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Peter Geoghegan | 2020-06-01 03:04:14 | Re: Potential G2-item cycles under serializable isolation |

| Previous Message | Michael Paquier | 2020-05-31 01:51:34 | Re: Re[2]: |