Re: Built-in connection pooler

| From: | Ryan Lambert <ryan(at)rustprooflabs(dot)com> |

|---|---|

| To: | Konstantin Knizhnik <k(dot)knizhnik(at)postgrespro(dot)ru> |

| Cc: | Thomas Munro <thomas(dot)munro(at)gmail(dot)com>, PostgreSQL Hackers <pgsql-hackers(at)postgresql(dot)org>, Bruce Momjian <bruce(at)momjian(dot)us>, Dimitri Fontaine <dim(at)tapoueh(dot)org> |

| Subject: | Re: Built-in connection pooler |

| Date: | 2019-07-24 21:58:27 |

| Message-ID: | CAN-V+g99RvCr5GCRkn_H6k2Mom76HcAqFrfzQxuFNEa-Hip5Mw@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-hackers |

Hello Konstantin,

> Concerning testing: I do not think that connection pooler needs some kind

of special tests.

> The idea of built-in connection pooler is that it should be able to

handle all requests normal postgres can do.

> I have added to regression tests extra path with enabled connection

proxies.

> Unfortunately, pg_regress is altering some session variables, so it

backend becomes tainted and so

> pooling is not actually used (but communication through proxy is tested).

> Thank you for your work on this patch, I took some good time to really

explore the configuration and do some testing with pgbench. This round of

testing was done against patch 10 [1] and master branch commit a0555ddab9

from 7/22.

Thank you for explaining, I wasn't sure.

make installcheck-world: tested, passed

Implements feature: tested, passed

Documentation: I need to review again, I saw typos when testing but didn't

make note of the details.

Applying the patch [1] has improved from v9, still getting these:

git apply -p1 < builtin_connection_proxy-10.patch

<stdin>:1536: indent with spaces.

/* Has pending clients: serve one of them */

<stdin>:1936: indent with spaces.

/* If we attach new client to the existed backend,

then we need to send handshake response to the client */

<stdin>:2208: indent with spaces.

if (port->sock == PGINVALID_SOCKET)

<stdin>:2416: indent with spaces.

FuncCallContext* srf_ctx;

<stdin>:2429: indent with spaces.

ps_ctx = (PoolerStateContext*)palloc(sizeof(PoolerStateContext));

warning: squelched 5 whitespace errors

warning: 10 lines add whitespace errors.

I used a DigitalOcean droplet with 2 CPU and 2 GB RAM and SSD for this

testing, Ubuntu 18.04. I chose the smaller server size based on the

availability of similar and recent results around connection pooling [2]

that used AWS EC2 m4.large instance (2 cores, 8 GB RAM) and pgbouncer.

Your prior pgbench tests [3] also focused on larger servers so I wanted to

see how this works on smaller hardware.

Considering this from connpool.sgml:

"<varname>connection_proxies</varname> specifies number of connection proxy

processes which will be spawned. Default value is zero, so connection

pooling is disabled by default."

That hints to me that connection_proxies is the main configuration to start

with so that was the only configuration I changed from the default for this

feature. I adjusted shared_buffers to 500MB (25% of total) and

max_connections to 1000. Only having one proxy gives subpar performance

across the board, so did setting this value to 10. My hunch is this value

should roughly follow the # of cpus available, but that's just a hunch.

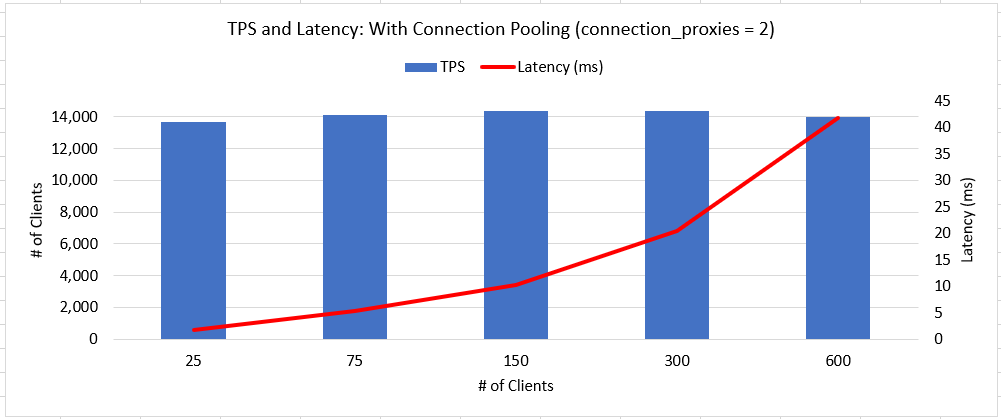

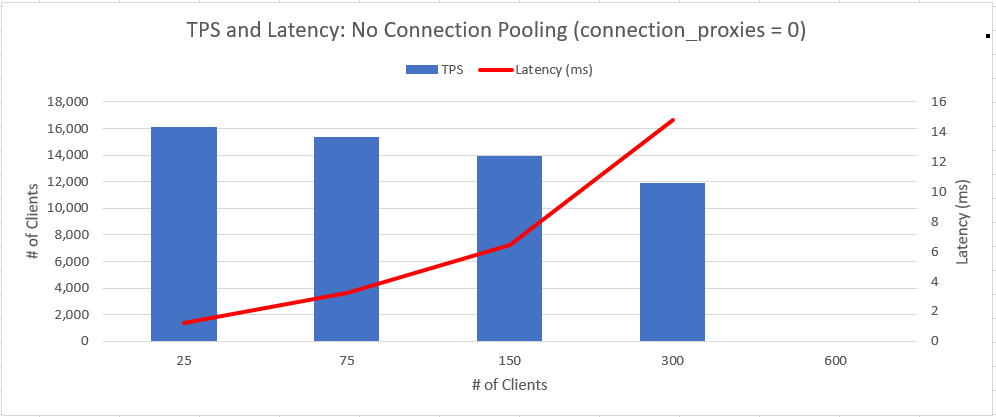

I tested with 25, 75, 150, 300 and 600 connections. Initialized with a

scale of 1000 and ran read-only tests. Basic pgbench commands look like

the following, I have full commands and results from 18 tests included in

the attached MD file. Postgres was restarted between each test.

pgbench -i -s 1000 bench_test

pgbench -p 6543 -c 300 -j 2 -T 600 -P 60 -S bench_test

Tests were all ran from the same server. I intend to do further testing

with external connections using SSL.

General observations:

For each value of connection_proxies, the TPS observed at 25 connections

held up reliably through 600 connections. For this server using

connection_proxies = 2 was the fastest, but setting to 1 or 10 still

provided **predictable** throughput. That seems to be a good attribute for

this feature.

Also predictable was the latency increase, doubling the connections roughly

doubles the latency. This was true with or without connection pooling.

Focusing on disabled connection pooling vs the feature with two proxies,

the results are promising, the breakpoint seems to be around 150

connections.

Low connections (25): -15% TPS; +45% latency

Medium connections (300): +21% TPS; +38% latency

High connections (600): Couldn't run without pooling... aka: Win for

pooling!

The two attached charts show the TPS and average latency of these two

scenarios. This feature does a great job of maintaining a consistent TPS

as connection numbers increase. This comes with tradeoffs of lower

throughput with < 150 connections, and higher latency across the board.

The increase in latency seems reasonable to me based on the testing I have

done so far. Compared to the results from [2] it seems latency is affecting

this feature a bit more than it does pgbouncer, yet not unreasonably so

given the benefit of having the feature built in and the reduced complexity.

I don't understand yet how max_sessions ties in.

Also, having both session_pool_size and connection_proxies seemed confusing

at first. I still haven't figured out exactly how they relate together in

the overall operation and their impact on performance. The new view

helped, I get the concept of **what** it is doing (connection_proxies =

more rows, session_pool_size = n_backends for each row), it's more a lack

of understanding the **why** regarding how it will operate.

postgres=# select * from pg_pooler_state();

pid | n_clients | n_ssl_clients | n_pools | n_backends |

n_dedicated_backends | tx_bytes | rx_bytes | n_transactions

------+-----------+---------------+---------+------------+----------------------+-----------+-----------+----------------

1682 | 75 | 0 | 1 | 10 |

0 | 366810458 | 353181393 | 5557109

1683 | 75 | 0 | 1 | 10 |

0 | 368464689 | 354778709 | 5582174

(2 rows

I am not sure how I feel about this:

"Non-tainted backends are not terminated even if there are no more

connected sessions."

Would it be possible (eventually) to monitor connection rates and free up

non-tainted backends after a time? The way I'd like to think of that

working would be:

If 50% of backends are unused for more than 1 hour, release 10% of

established backends.

The two percentages and time frame would ideally be configurable, but setup

in a way that it doesn't let go of connections too quickly, causing

unnecessary expense of re-establishing those connections. My thought is if

there's one big surge of connections followed by a long period of lower

connections, does it make sense to keep those extra backends established?

I'll give the documentation another pass soon. Thanks for all your work on

this, I like what I'm seeing so far!

[1]

https://www.postgresql.org/message-id/attachment/102732/builtin_connection_proxy-10.patch

[2] http://richyen.com/postgres/2019/06/25/pools_arent_just_for_cars.html

[3]

https://www.postgresql.org/message-id/ede4470a-055b-1389-0bbd-840f0594b758%40postgrespro.ru

Thanks,

Ryan Lambert

On Fri, Jul 19, 2019 at 3:10 PM Konstantin Knizhnik <

k(dot)knizhnik(at)postgrespro(dot)ru> wrote:

>

>

> On 19.07.2019 6:36, Ryan Lambert wrote:

>

> Here's what I found tonight in your latest patch (9). The output from git

> apply is better, fewer whitespace errors, but still getting the following.

> Ubuntu 18.04 if that helps.

>

> git apply -p1 < builtin_connection_proxy-9.patch

> <stdin>:79: tab in indent.

> Each proxy launches its own subset of backends.

> <stdin>:634: indent with spaces.

> union {

> <stdin>:635: indent with spaces.

> struct sockaddr_in inaddr;

> <stdin>:636: indent with spaces.

> struct sockaddr addr;

> <stdin>:637: indent with spaces.

> } a;

> warning: squelched 54 whitespace errors

> warning: 59 lines add whitespace errors.

>

>

> A few more minor edits. In config.sgml:

>

> "If the <varname>max_sessions</varname> limit is reached new connection

> are not accepted."

> Should be "connections".

>

> "The default value is 10, so up to 10 backends will server each database,"

> "sever" should be "serve" and the sentence should end with a period

> instead of a comma.

>

>

> In postmaster.c:

>

> /* The socket number we are listening for poolled connections on */

> "poolled" --> "pooled"

>

>

> "(errmsg("could not create listen socket for locahost")));"

>

> "locahost" -> "localhost".

>

>

> " * so to support order balancing we should do dome smart work here."

>

> "dome" should be "some"?

>

> I don't see any tests covering this new functionality. It seems that this

> is significant enough functionality to warrant some sort of tests, but I

> don't know exactly what those would/should be.

>

>

> Thank you once again for this fixes.

> Updated patch is attached.

>

> Concerning testing: I do not think that connection pooler needs some kind

> of special tests.

> The idea of built-in connection pooler is that it should be able to handle

> all requests normal postgres can do.

> I have added to regression tests extra path with enabled connection

> proxies.

> Unfortunately, pg_regress is altering some session variables, so it

> backend becomes tainted and so

> pooling is not actually used (but communication through proxy is tested).

>

> It is also possible to run pg_bench with different number of connections

> though connection pooler.

>

>

> Thanks,

> Ryan

>

>

>

| Attachment | Content-Type | Size |

|---|---|---|

|

image/png | 25.3 KB |

|

image/png | 25.6 KB |

| pg_conn_pool_notes_3.md | application/octet-stream | 27.7 KB |

In response to

- Re: Built-in connection pooler at 2019-07-19 21:10:34 from Konstantin Knizhnik

Responses

- Re: Built-in connection pooler at 2019-07-25 12:00:30 from Konstantin Knizhnik

Browse pgsql-hackers by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Peter Geoghegan | 2019-07-24 22:06:13 | Re: [HACKERS] [WIP] Effective storage of duplicates in B-tree index. |

| Previous Message | Tomas Vondra | 2019-07-24 21:52:28 | Re: Memory Accounting |