Re: slab allocator performance issues

| From: | David Rowley <dgrowleyml(at)gmail(dot)com> |

|---|---|

| To: | John Naylor <john(dot)naylor(at)enterprisedb(dot)com> |

| Cc: | Tomas Vondra <tomas(dot)vondra(at)enterprisedb(dot)com>, Andres Freund <andres(at)anarazel(dot)de>, pgsql-hackers(at)postgresql(dot)org, Tomas Vondra <tv(at)fuzzy(dot)cz> |

| Subject: | Re: slab allocator performance issues |

| Date: | 2022-12-10 04:01:56 |

| Message-ID: | CAApHDvr0pKjrGVKFg2VqSUjbB1QxsKobu0PhD11qkSO_caL3dg@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-hackers |

.On Mon, 5 Dec 2022 at 23:18, John Naylor <john(dot)naylor(at)enterprisedb(dot)com> wrote:

>

>

> On Mon, Dec 5, 2022 at 3:02 PM David Rowley <dgrowleyml(at)gmail(dot)com> wrote:

> > Going by [2], the instructions are very different with each method, so

> > other machines with different latencies on those instructions might

> > show something different. I attached what I used to test if anyone

> > else wants a go.

>

> I get about 0.1% difference on my machine. Both ways boil down to (on gcc) 3 instructions with low latency. The later ones need the prior results to execute, which I think is what the XXX comment "isn't great" was referring to. The new coding is more mysterious (does it do the right thing on all platforms?), so I guess the original is still the way to go unless we get a better idea.

I don't think it would work well on a one's complement machine, but I

don't think we support those going by the comments above RIGHTMOST_ONE

in bitmapset.c. In anycase, I found that it wasn't any faster than

what Andres wrote. In fact, even changing the code there to "index =

(freecount > 0);" seems to do very little to increase performance. I

do see that having 3 freelist items performs a decent amount better

than having 9. However, which workload is run may alter the result of

that, assuming that keeping new allocations on fuller blocks is a

winning strategy for the CPU's caches.

I've now done quite a bit more work on Andres' patch to try and get it

into (hopefully) somewhere close to a committable shape.

I'm fairly happy with what's there now. It does seem to perform much

better than current master, especially so when the workload would have

caused master to continually malloc and free an entire block when

freeing and allocating a single chunk.

I've done some basic benchmarking, mostly using the attached alloc_bench patch.

If I run:

select *, round(slab_result / aset_result * 100 - 100,1)::text || '%'

as slab_slower_by

from (

select

chunk_size,

keep_chunks,

sum(pg_allocate_memory_test(chunk_size, chunk_size * keep_chunks,

1024*1024*1024, 'slab'))::numeric(1000,3) as slab_result,

sum(pg_allocate_memory_test(chunk_size, chunk_size * keep_chunks,

1024*1024*1024, 'aset'))::numeric(1000,3) as aset_result

from

(values(1),(10),(50),(100),(200),(300),(400),(500),(1000),(2000),(3000),(4000),(5000),(10000))

v1(keep_chunks),

(values(64),(128),(256),(512),(1024)) v2(chunk_size)

group by rollup(1,2)

);

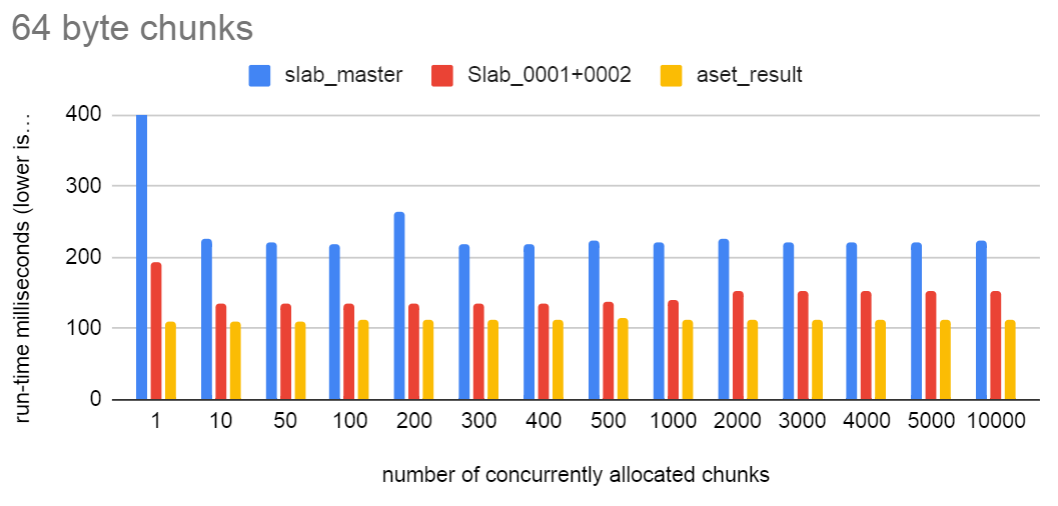

The results for the 64-byte chunk are shown in the attached chart.

It's not as fast as aset, but much faster than master's slab.c

The first blue bar of the chart is well above the vertical axis. It

took master 1118958 milliseconds for that test. The attached patch

took 194 ms. The rest of the tests seem to put the patched code around

somewhere in the middle between the unpatched code and aset.c's

performance.

The benchmark I did was entirely a FIFO workload that keeps around

"keep_chunks" at once before starting to free the oldest chunks. I

know Tomas has some LIFO and random benchmarks. I edited that code [1]

a little to add support for other context types so that a comparison

could be done more easily, however, I'm getting very weird performance

results where sometimes it runs about twice as fast (Tomas mentioned

he got this too). I'm not seeing that with my own benchmarking

function, so I'm wondering if there's something weird going on with

the benchmark itself rather than the slab.c code.

I've likely made much more changes than I can list here, but here are

a few of the more major ones:

1. Make use of dclist for empty blocks

2. In SlabAlloc() allocate chunks from the freelist before the unused list.

3. Added output for showing information about empty blocks in the

SlabStats output.

4. Renamed the context freelists to blocklist. I found this was

likely just confusing things with the block-level freelist. In any

case, it seemed weird to have freelist[0] store full blocks. Not much

free there! I renamed to blocklist[].

5. I did a round of changing the order of the fields in SlabBlock.

This seems to affect performance quite a bit. Having the context first

seems to improve performance. Having the blocklist[] node last also

helps.

6. Removed nblocks and headerSize from SlabContext. headerSize is no

longer needed. nblocks was only really used for Asserts and

SlabIsEmpty. I changed the Asserts to use a local count of blocks and

changed SlabIsEmpty to look at the context's mem_allocated.

7. There's now no integer division in any of the alloc and free code.

The only time we divide by fullChunkSize is in the check function.

8. When using a block from the emptyblock list, I changed the code to

not re-init the block. It's now used as it was left previously. This

means no longer having to build the freelist again.

9. Updated all comments to reflect the current state of the code.

Some things I thought about but didn't do:

a. Change the size of SlabContext's chunkSize, fullChunkSize and

blockSize to be uint32 instead of Size. It might be possible to get

SlabContext below 128 bytes with a bit more work.

b. I could have done a bit more experimentation with unlikely() and

likely() to move less frequently accessed code off into a cold area.

For #2 above, I didn't really see much change in performance when I

swapped the order of what we allocate from first. I expected free

chunks would be better as they've been used and are seemingly more

likely to be in some CPU cache than one of the unused chunks. I might

need a different allocation pattern than the one I used to highlight

that fact though.

David

[1] https://github.com/david-rowley/postgres/tree/alloc_bench_contrib

| Attachment | Content-Type | Size |

|---|---|---|

| v4-0001-Improve-the-performance-of-the-slab-memory-alloca.patch | text/plain | 37.3 KB |

| alloc_bench.patch | text/plain | 7.8 KB |

|

image/png | 52.8 KB |

In response to

- Re: slab allocator performance issues at 2022-12-05 10:18:24 from John Naylor

Responses

- Re: slab allocator performance issues at 2022-12-12 07:13:47 from John Naylor

Browse pgsql-hackers by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Michael Paquier | 2022-12-10 04:07:29 | Re: Generate pg_stat_get_* functions with Macros |

| Previous Message | Nathan Bossart | 2022-12-10 03:55:45 | Re: Generate pg_stat_get_* functions with Macros |