Add bump memory context type and use it for tuplesorts

| From: | David Rowley <dgrowleyml(at)gmail(dot)com> |

|---|---|

| To: | PostgreSQL Developers <pgsql-hackers(at)lists(dot)postgresql(dot)org> |

| Subject: | Add bump memory context type and use it for tuplesorts |

| Date: | 2023-06-27 09:19:26 |

| Message-ID: | CAApHDvqGSpCU95TmM=Bp=6xjL_nLys4zdZOpfNyWBk97Xrdj2w@mail.gmail.com |

| Lists: | pgsql-hackers |

Background:

The idea with 40af10b57 (Use Generation memory contexts to store

tuples in sorts) was to reduce the memory wastage in tuplesort.c

caused by aset.c's power-of-2 rounding up behaviour and allow more

tuples to be stored per work_mem in tuplesort.c

Later, (v16's) c6e0fe1f2a (Improve performance of and reduce

overheads of memory management) reduced the palloc chunk header

overhead down to 8 bytes. For generation.c contexts (as are now used

by non-bounded tuplesorts as of 40af10b57), the overhead was

previously 24 bytes. So this allowed even more tuples to be stored in

a work_mem by reducing the chunk overheads for non-bounded tuplesorts

by 2/3rds (16 bytes), down to 8 bytes.

1083f94da (Be smarter about freeing tuples during tuplesorts) removed

the useless pfree() calls from tuplesort.c which pfree'd the tuples

just before we reset the context. So, as of now, we never pfree()

memory allocated to store tuples in non-bounded tuplesorts.

My thoughts are, if we never pfree tuples in tuplesorts, then why

bother having a chunk header at all?

Proposal:

Because of all of what is mentioned above about the current state of

tuplesort, there does not really seem to be much need to have chunk

headers in memory we allocate for tuples at all. Not having these

saves us a further 8 bytes per tuple.

In the attached patch, I've added a bump memory allocator which

allocates chunks without any chunk header. This means the chunks

cannot be pfree'd or realloc'd. That seems fine for the use case for

storing tuples in tuplesort. I've coded bump.c in such a way that when

built with MEMORY_CONTEXT_CHECKING, we *do* have chunk headers. That

should allow us to pick up any bugs that are introduced by any code

which accidentally tries to pfree a bump.c chunk.

I'd expect a bump.c context only to be used for fairly short-lived

memory that's only used by a small amount of code (e.g. only accessed

from a single .c file, like tuplesort.c). That should reduce the risk

of any code accessing the memory which might be tempted into calling

pfree or some other unsupported function.

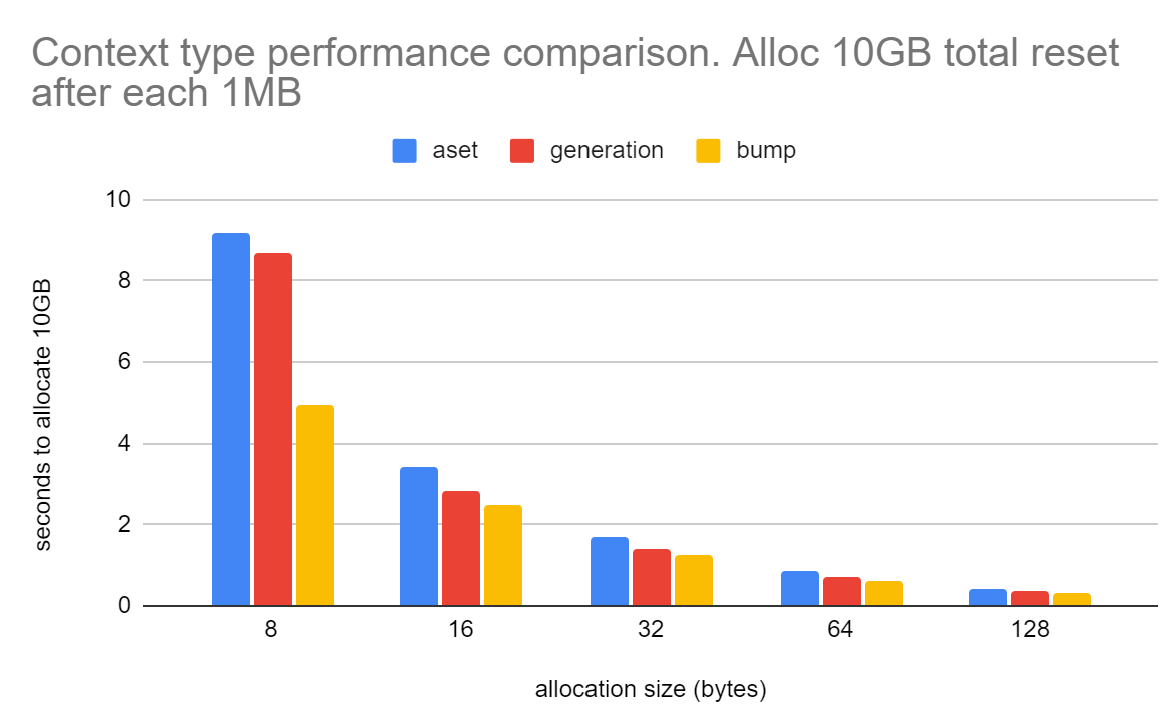

Getting away from the complexity of freelists (aset.c) and tracking

allocations per block (generation.c) allows much better allocation

performance. All we need to do is check there's enough space then

bump the free pointer when performing an allocation. See the attached

time_to_allocate_10gbs_memory.png to see how bump.c compares to aset.c

and generation.c when allocating 10GBs of memory, resetting the context

after every 1MB. It's not far off twice as fast in raw allocation speed.

(See 3rd tab in the attached spreadsheet)

Performance:

In terms of the speed of palloc(), the performance tested on an AMD

3990x CPU on Linux for 8-byte chunks:

aset.c 9.19 seconds

generation.c 8.68 seconds

bump.c 4.95 seconds

These numbers were generated by calling:

select stype,chksz,pg_allocate_memory_test_reset(chksz,1024*1024,10::bigint*1024*1024*1024,stype)
from (values(8),(16),(32),(64),(128)) t1(chksz)
cross join (values('aset'),('generation'),('bump')) t2(stype)
order by stype,chksz;

This allocates a total of 10GBs of chunks but calls a context reset

after 1MB so as not to let the memory usage get out of hand. The

function is in the attached membench.patch.txt file.

In terms of performance of tuplesort, there's a small (~5-6%)

performance gain. Not as much as I'd hoped, but I'm also doing a bit

of other work on tuplesort to make it more efficient in terms of CPU,

so I suspect the cache efficiency improvements might be more

pronounced after those.

Please see the attached bump_context_tuplesort_2023-06-27.ods for my

complete benchmark.

One thing that might need more thought is that we're running a bit low

on MemoryContextMethodIDs. I had to use an empty slot that has a bit

pattern like glibc malloc'd chunks sized 128kB. Maybe it's worth

freeing up a bit from the block offset in MemoryChunk. This is

currently 30 bits allowing 1GB offset, but these offsets are always

MAXALIGNED, so we could free up a couple of bits since those 2

lowest-order bits will always be 0 anyway.

I've attached the bump allocator patch and also the script I used to

gather the performance results in the first 2 tabs in the attached

spreadsheet.

David

| Attachment | Content-Type | Size |

|---|---|---|

| membench.patch.txt | text/plain | 8.0 KB |

| time_to_allocate_10gbs_memory.png | image/png | 52.2 KB |

| bump_context_tuplesort_2023-06-27.ods | application/vnd.oasis.opendocument.spreadsheet | 110.8 KB |

| bump_allocator.patch | text/plain | 35.3 KB |

| sortbenchall.sh.txt | text/plain | 2.3 KB |

Responses

- Re: Add bump memory context type and use it for tuplesorts at 2023-07-10 23:51:11 from David Rowley

- Re: Add bump memory context type and use it for tuplesorts at 2023-07-26 00:11:49 from Nathan Bossart
