Re: Parallel Seq Scan vs kernel read ahead

| From: | David Rowley <dgrowleyml(at)gmail(dot)com> |

|---|---|

| To: | Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Thomas Munro <thomas(dot)munro(at)gmail(dot)com>, Ranier Vilela <ranier(dot)vf(at)gmail(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: Parallel Seq Scan vs kernel read ahead |

| Date: | 2020-06-11 01:47:49 |

| Message-ID: | CAApHDvopPkA+q5y_k_6CUV4U6DPhmz771VeUMuzLs3D3mWYMOg@mail.gmail.com |


| Lists: | pgsql-hackers |

On Thu, 11 Jun 2020 at 01:24, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> wrote:

> Can we try the same test with 4, 8, 16 workers as well? I don't

> foresee any problem with a higher number of workers but it might be

> better to once check that if it is not too much additional work.

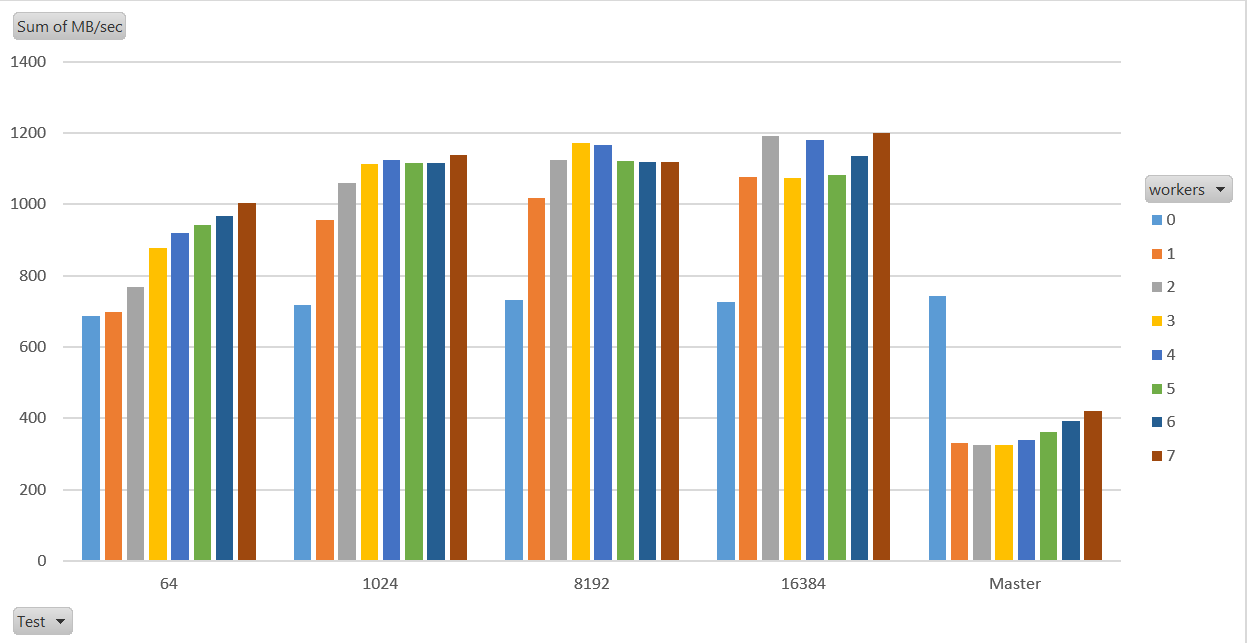

I ran the tests again with up to 7 workers. The CPU here (a laptop) only
has 8 cores, so I'm not sure there's much sense in going higher than that.

CPU = Intel i7-8565U. 16GB RAM.

Note that I used the power-of-2 ramp-up for each of the patched tests this
time. Thomas' version ramps up 1 page at a time, which is OK when
only ramping up to 64 pages, but not for the higher numbers I'm
testing with. (Patch attached)
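To illustrate why the ramp-up strategy matters at the larger sizes, here is a minimal sketch comparing the two schedules. It is not the logic from the attached patch: the function names `linear_ramp`, `power2_ramp`, and `ramp_cost` are hypothetical, and the model simply assumes each allocation hands out one chunk of the current size until the maximum is reached.

```python
def linear_ramp(max_pages):
    """Yield chunk sizes that grow by one page per allocation (assumed model)."""
    size = 1
    while size < max_pages:
        yield size
        size += 1
    yield max_pages


def power2_ramp(max_pages):
    """Yield chunk sizes that double per allocation (assumed model)."""
    size = 1
    while size < max_pages:
        yield size
        size *= 2
    yield max_pages


def ramp_cost(ramp):
    """Return (allocations, pages handed out) up to and including the first full-size chunk."""
    sizes = list(ramp)
    return len(sizes), sum(sizes)


if __name__ == "__main__":
    for max_pages in (64, 1024, 8192, 16384):
        print(max_pages,
              "linear:", ramp_cost(linear_ramp(max_pages)),
              "power2:", ramp_cost(power2_ramp(max_pages)))
```

Under this model, a one-page-at-a-time ramp to 64 pages takes 64 allocations, while a ramp to 16384 pages takes 16384 allocations and hands out over 134 million pages before the first full-size chunk. Doubling reaches 16384 pages in 15 allocations.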

Results are attached as a graph, and listed as text below:

Master:

workers=0: Time: 141175.935 ms (02:21.176) (742.7MB/sec)

workers=1: Time: 316854.538 ms (05:16.855) (330.9MB/sec)

workers=2: Time: 323471.791 ms (05:23.472) (324.2MB/sec)

workers=3: Time: 321637.945 ms (05:21.638) (326MB/sec)

workers=4: Time: 308689.599 ms (05:08.690) (339.7MB/sec)

workers=5: Time: 289014.709 ms (04:49.015) (362.8MB/sec)

workers=6: Time: 267785.27 ms (04:27.785) (391.6MB/sec)

workers=7: Time: 248735.817 ms (04:08.736) (421.6MB/sec)

Patched 64: (power 2 ramp-up)

workers=0: Time: 152752.558 ms (02:32.753) (686.5MB/sec)

workers=1: Time: 149940.841 ms (02:29.941) (699.3MB/sec)

workers=2: Time: 136534.043 ms (02:16.534) (768MB/sec)

workers=3: Time: 119387.248 ms (01:59.387) (878.3MB/sec)

workers=4: Time: 114080.131 ms (01:54.080) (919.2MB/sec)

workers=5: Time: 111472.144 ms (01:51.472) (940.7MB/sec)

workers=6: Time: 108290.608 ms (01:48.291) (968.3MB/sec)

workers=7: Time: 104349.947 ms (01:44.350) (1004.9MB/sec)

Patched 1024: (power 2 ramp-up)

workers=0: Time: 146106.086 ms (02:26.106) (717.7MB/sec)

workers=1: Time: 109832.773 ms (01:49.833) (954.7MB/sec)

workers=2: Time: 98921.515 ms (01:38.922) (1060MB/sec)

workers=3: Time: 94259.243 ms (01:34.259) (1112.4MB/sec)

workers=4: Time: 93275.637 ms (01:33.276) (1124.2MB/sec)

workers=5: Time: 93921.452 ms (01:33.921) (1116.4MB/sec)

workers=6: Time: 93988.386 ms (01:33.988) (1115.6MB/sec)

workers=7: Time: 92096.414 ms (01:32.096) (1138.6MB/sec)

Patched 8192: (power 2 ramp-up)

workers=0: Time: 143367.057 ms (02:23.367) (731.4MB/sec)

workers=1: Time: 103138.918 ms (01:43.139) (1016.7MB/sec)

workers=2: Time: 93368.573 ms (01:33.369) (1123.1MB/sec)

workers=3: Time: 89464.529 ms (01:29.465) (1172.1MB/sec)

workers=4: Time: 89921.393 ms (01:29.921) (1166.1MB/sec)

workers=5: Time: 93575.401 ms (01:33.575) (1120.6MB/sec)

workers=6: Time: 93636.584 ms (01:33.637) (1119.8MB/sec)

workers=7: Time: 93682.21 ms (01:33.682) (1119.3MB/sec)

Patched 16384: (power 2 ramp-up)

workers=0: Time: 144598.502 ms (02:24.599) (725.2MB/sec)

workers=1: Time: 97344.16 ms (01:37.344) (1077.2MB/sec)

workers=2: Time: 88025.42 ms (01:28.025) (1191.2MB/sec)

workers=3: Time: 97711.521 ms (01:37.712) (1073.1MB/sec)

workers=4: Time: 88877.913 ms (01:28.878) (1179.8MB/sec)

workers=5: Time: 96985.978 ms (01:36.986) (1081.2MB/sec)

workers=6: Time: 92368.543 ms (01:32.369) (1135.2MB/sec)

workers=7: Time: 87498.156 ms (01:27.498) (1198.4MB/sec)
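The timings above can be compared directly. As a rough sketch, the following computes the speedup of the "Patched 8192" run over master for each worker count, using the millisecond values copied from the lists above. The helper name `speedups` is hypothetical and only exists for this illustration.

```python
# Timings in milliseconds, copied from the results above (list index = workers).
MASTER_MS = [141175.935, 316854.538, 323471.791, 321637.945,
             308689.599, 289014.709, 267785.27, 248735.817]
PATCHED_8192_MS = [143367.057, 103138.918, 93368.573, 89464.529,
                   89921.393, 93575.401, 93636.584, 93682.21]


def speedups(baseline_ms, patched_ms):
    """Return baseline/patched time ratios; values above 1 mean the patch is faster."""
    return [b / p for b, p in zip(baseline_ms, patched_ms)]


SPEEDUPS = speedups(MASTER_MS, PATCHED_8192_MS)

if __name__ == "__main__":
    for workers, ratio in enumerate(SPEEDUPS):
        print(f"workers={workers}: {ratio:.2f}x")
```

With no workers the ratio is slightly below 1, and with one or more workers the patched run is roughly 2.7 to 3.6 times faster than master on these numbers.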

David

| Attachment | Content-Type | Size |
|---|---|---|
| | image/png | 17.6 KB |
| parallel_step_size.patch | application/octet-stream | 6.8 KB |

In response to

- Re: Parallel Seq Scan vs kernel read ahead at 2020-06-10 13:24:23 from Amit Kapila

Responses

- Re: Parallel Seq Scan vs kernel read ahead at 2020-06-11 02:08:53 from Amit Kapila
