Re: Hybrid Hash/Nested Loop joins and caching results from subplans

| From: | David Rowley <dgrowleyml(at)gmail(dot)com> |

|---|---|

| To: | Andy Fan <zhihui(dot)fan1213(at)gmail(dot)com> |

| Cc: | Simon Riggs <simon(at)2ndquadrant(dot)com>, PostgreSQL Developers <pgsql-hackers(at)lists(dot)postgresql(dot)org> |

| Subject: | Re: Hybrid Hash/Nested Loop joins and caching results from subplans |

| Date: | 2020-07-07 12:32:22 |

| Message-ID: | CAApHDvoGUsdPORSND25Zumk9e81b=Em22LdyiunB1Cgi8L0_bA@mail.gmail.com |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-hackers |

On Thu, 2 Jul 2020 at 22:57, David Rowley <dgrowleyml(at)gmail(dot)com> wrote:

> I've attached the v2 patch series.

There was a bug in v2 that caused the caching not to work properly

when a unique join skipped to the next outer row after finding the

first match. The cache was not correctly marked as complete in that

case. Normally we only mark the cache entry complete when we read the

scan to completion. Unique joins is a special case where we can mark

it as complete early.

I've also made a few more changes to reduce the size of the

ResultCacheEntry struct, taking it from 40 bytes down to 24. That

matters quite a bit when the cached tuple is very narrow. One of the

tests in resultcache.out, because we can now fit more entries in the

cache, it reports a 15% increase in cache hits.

I also improved the costing regarding the estimate of how many cache

entries we could fit in work mem. Previously I was not accounting for

the size of the cache data structures in memory. v2 only accounted for

the tuples themselves. It's important to count these as if we don't

then it could cause the costing to think we could fit more entries

than we actually could which meant the estimated number of cache

evictions was off and could result in preferring a result cache plan

when we perhaps shouldn't.

I've attached v4.

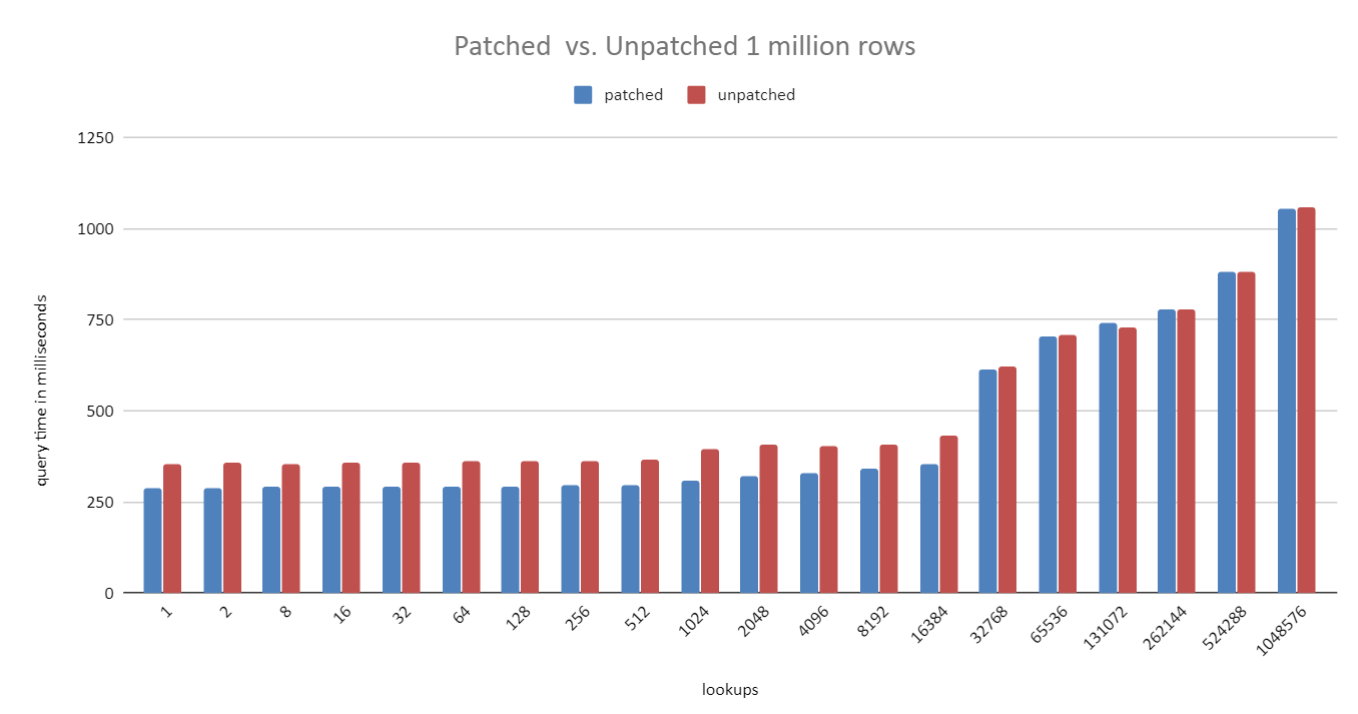

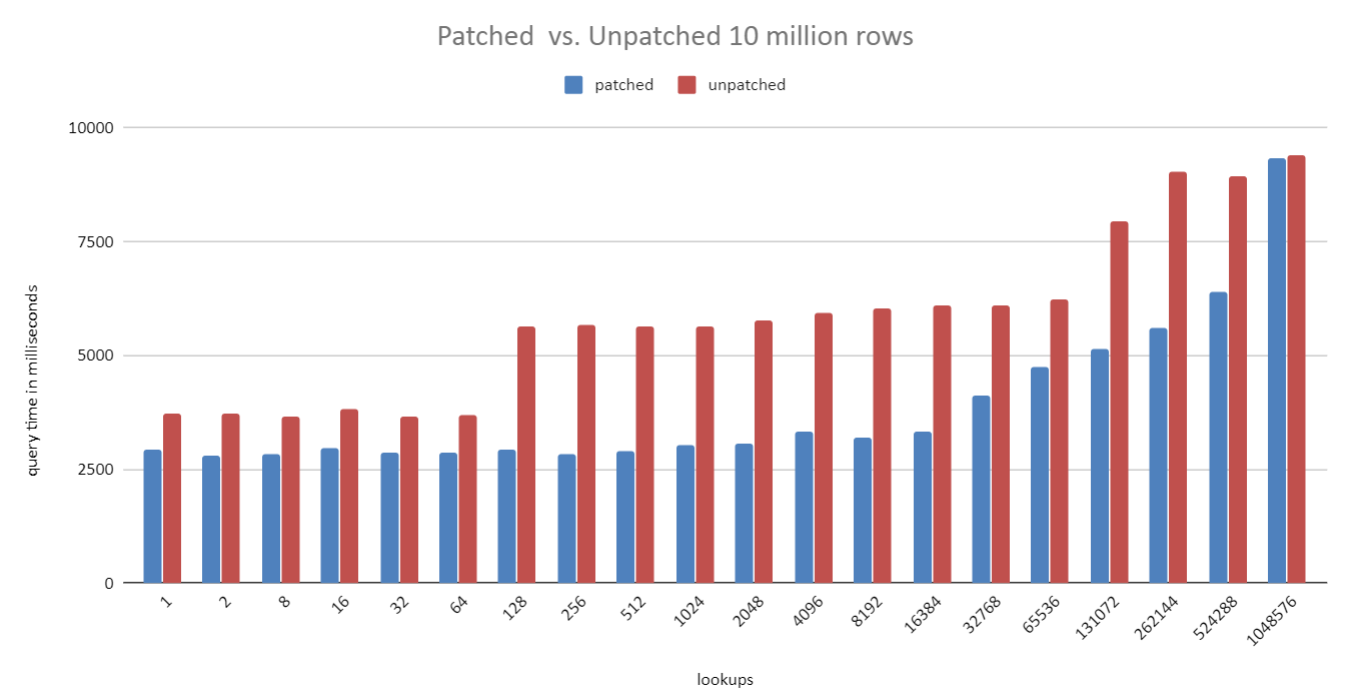

I've also attached a bunch of benchmark results which were based on v3

of the patch. I didn't send out v3, but the results of v4 should be

almost the same for this test. The script to run the benchmark is

contained in the resultcachebench.txt file. The benchmark just mocks

up a "parts" table and a "sales" table. The parts table has 1 million

rows in the 1 million test, as does the sales table. This goes up to

10 million and 100 million in the other two tests. What varies with

each bar in the chart is the number of distinct parts in the sales

table. I just started with 1 part then doubled that up to ~1 million.

The unpatched version always uses a Hash Join, which is wasteful since

only a subset of parts are looked up. In the 1 million test the

planner switches to using a Hash Join in the patched version at 65k

parts. It waits until the 1 million distinct parts test to switch

over in the 10 million and 100 million test. The hash join costs are

higher in that case due to multi-batching, which is why the crossover

point is higher on the larger scale tests. I used 256MB work_mem for

all tests. Looking closely at the 10 million test, you can see that

the hash join starts taking longer from 128 parts onward. The hash

table is the same each time here, so I can only suspect that the

slowdown between 64 and 128 parts is due to CPU cache thrashing when

getting the correct buckets from the overly large hash table. This is

not really visible in the patched version as the resultcache hash

table is much smaller.

David

| Attachment | Content-Type | Size |

|---|---|---|

| resultcachebench.txt | text/plain | 1.8 KB |

| v4-0002-Allow-users-of-simplehash.h-to-perform-direct-del.patch | application/octet-stream | 4.7 KB |

| v4-0001-Allow-estimate_num_groups-to-pass-back-further-de.patch | application/octet-stream | 8.8 KB |

| v4-0003-Add-Result-Cache-executor-node.patch | application/octet-stream | 155.9 KB |

|

image/png | 58.6 KB |

|

image/png | 61.3 KB |

|

image/png | 62.3 KB |

In response to

- Re: Hybrid Hash/Nested Loop joins and caching results from subplans at 2020-07-02 10:57:44 from David Rowley

Responses

- Re: Hybrid Hash/Nested Loop joins and caching results from subplans at 2020-07-08 03:37:09 from David Rowley

Browse pgsql-hackers by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | David Rowley | 2020-07-07 12:54:44 | Re: Default setting for enable_hashagg_disk (hash_mem) |

| Previous Message | mailajaypatel | 2020-07-07 11:41:15 | Re: Question: PostgreSQL on Amazon linux EC2 |