Re: TODO : Allow parallel cores to be used by vacuumdb [ WIP ]

| From: | Gregory Smith <gregsmithpgsql(at)gmail(dot)com> |

|---|---|

| To: | Gavin Flower <GavinFlower(at)archidevsys(dot)co(dot)nz>, Alvaro Herrera <alvherre(at)2ndquadrant(dot)com>, Amit Kapila <amit(dot)kapila16(at)gmail(dot)com> |

| Cc: | Dilip kumar <dilip(dot)kumar(at)huawei(dot)com>, Magnus Hagander <magnus(at)hagander(dot)net>, Jan Lentfer <Jan(dot)Lentfer(at)web(dot)de>, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us>, PostgreSQL-development <pgsql-hackers(at)postgresql(dot)org>, Sawada Masahiko <sawada(dot)mshk(at)gmail(dot)com>, Euler Taveira <euler(at)timbira(dot)com(dot)br> |

| Subject: | Re: TODO : Allow parallel cores to be used by vacuumdb [ WIP ] |

| Date: | 2014-09-26 23:36:53 |

| Message-ID: | 5425F895.8060704@gmail.com |


| Lists: | pgsql-hackers |

On 9/26/14, 2:38 PM, Gavin Flower wrote:

> Curious: would it be both feasible and useful to have multiple workers

> process a 'large' table, without complicating things too much? They

> could each start at a different position in the file.

Not really feasible without a major overhaul. It might be mildly useful

in one rare case. Occasionally I'll find very hot single tables that

vacuum is constantly processing, despite mostly living in RAM because

the server has a lot of memory. You can set vacuum_cost_page_hit=0 in

order to get vacuum to chug through such a table as fast as possible.

However, the speed at which that happens will often then be limited by

how fast a single core can read from memory, for things in

shared_buffers. That is limited by the speed of memory transfers from a

single NUMA memory bank. Which bank you get will vary depending on the

core that owns that part of shared_buffers' memory, but it's only one at

a time.

On large servers, that can be only a small fraction of the total memory

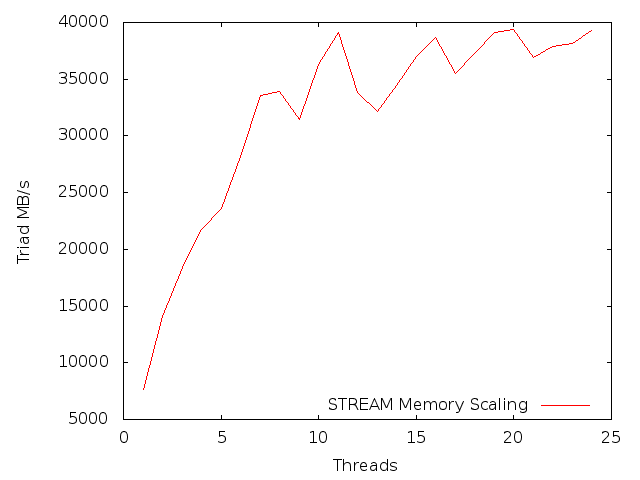

bandwidth the server is able to reach. I've attached a graph showing

how this works on a system with many NUMA banks of RAM, and this is only

a medium sized system. This server can hit 40GB/s of memory transfers

in total; no one process will ever see more than 8GB/s.

If we had more vacuum processes running against the same table, there

would then be more situations where they were doing work against

different NUMA memory banks at the same time, therefore making faster

progress through the hits in shared_buffers possible. In the real world,

this situation is rare enough compared to disk-bound vacuum work that I

doubt it's worth getting excited over. Systems with lots of RAM where

performance is regularly dominated by one big ugly table are common

though, so I wouldn't just rule the idea out as not useful either.
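
As a rough illustration of the arithmetic above, here is a minimal Python sketch of the best-case effect of adding workers. It uses only the two figures quoted in this message (40GB/s in total, 8GB/s per process). The function names and the 80GB table size are hypothetical, and the model assumes every worker reads from a different NUMA bank and ignores locking, index passes, and WAL, so it gives an upper bound rather than a prediction.

```python
# Figures quoted in the message; everything else here is an assumption.
TOTAL_BANDWIDTH_GBPS = 40.0   # aggregate memory bandwidth of the server
PER_PROCESS_GBPS = 8.0        # most that any single process can see


def best_case_bandwidth(workers: int) -> float:
    """Upper bound on combined read rate for `workers` processes.

    Assumes each worker is served by a different NUMA bank, so rates
    add up until the server-wide total is reached.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return min(workers * PER_PROCESS_GBPS, TOTAL_BANDWIDTH_GBPS)


def best_case_scan_seconds(table_gb: float, workers: int) -> float:
    """Lower bound on the time to read `table_gb` of cached pages once."""
    return table_gb / best_case_bandwidth(workers)


if __name__ == "__main__":
    table_gb = 80.0  # hypothetical size of the hot table in shared_buffers
    for workers in (1, 2, 4, 8):
        rate = best_case_bandwidth(workers)
        secs = best_case_scan_seconds(table_gb, workers)
        print(f"{workers} worker(s): at most {rate:.0f}GB/s, "
              f"at least {secs:.1f}s per pass")
```

Under these assumptions a single process can use at most a fifth of the machine's memory bandwidth, and the gain from extra workers stops once their combined rate reaches the 40GB/s total.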

--

Greg Smith greg(dot)smith(at)crunchydatasolutions(dot)com

Chief PostgreSQL Evangelist - http://crunchydatasolutions.com/

| Attachment | Content-Type | Size |
|---|---|---|
| | image/png | 5.8 KB |

In response to

- Re: TODO : Allow parallel cores to be used by vacuumdb [ WIP ] at 2014-09-26 18:38:25 from Gavin Flower

Responses

- Re: TODO : Allow parallel cores to be used by vacuumdb [ WIP ] at 2014-09-27 04:55:22 from Gavin Flower
