Re: what to revert

| From: | Tomas Vondra <tomas(dot)vondra(at)2ndquadrant(dot)com> |
|---|---|
| To: | Kevin Grittner <kgrittn(at)gmail(dot)com> |
| Cc: | Andres Freund <andres(at)anarazel(dot)de>, "pgsql-hackers(at)postgresql(dot)org" <pgsql-hackers(at)postgresql(dot)org> |
| Subject: | Re: what to revert |
| Date: | 2016-05-31 16:38:53 |
| Message-ID: | 067acb3d-80eb-279d-fce0-90e0a36c6aa2@2ndquadrant.com |
| Lists: | pgsql-hackers |

Hi,

On 05/15/2016 10:06 PM, Tomas Vondra wrote:

...

> What I plan to do next, over the next week:
>
> 1) Wait for the second run of "immediate" to complete (should happen in
> a few hours)
>
> 2) Do tests with other workloads (mostly read-only, read-write).

I've finally had time to look at the results from the additional read-write
runs. If needed, the whole archive (old_snapshot3.tgz) is available in
the same Google Drive folder as the previous results:

https://drive.google.com/open?id=0BzigUP2Fn0XbR1dyOFA3dUxRaUU

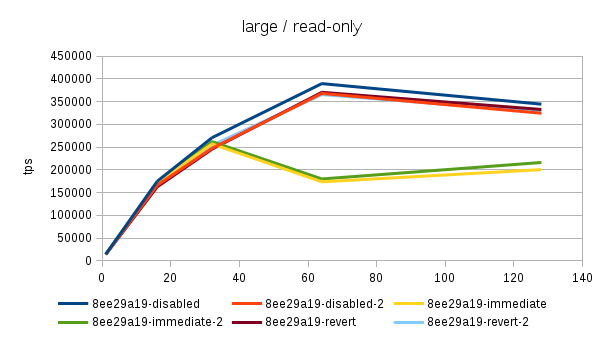

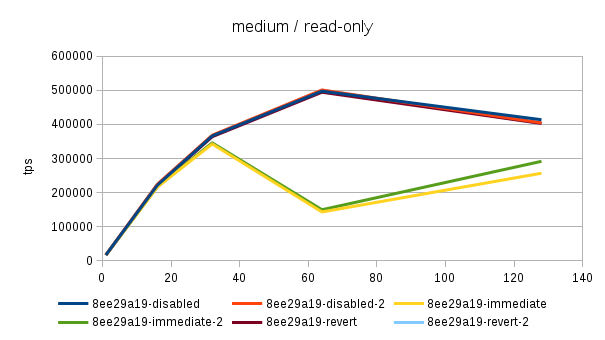

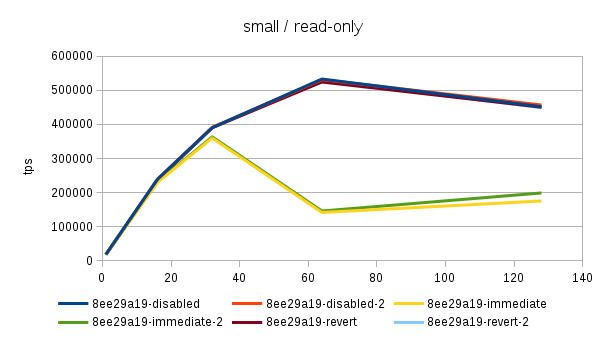

I've already analyzed the read-only results in my previous message (15/5),
and the additional "immediate" run was consistent with that, so I have
nothing to add at this point.

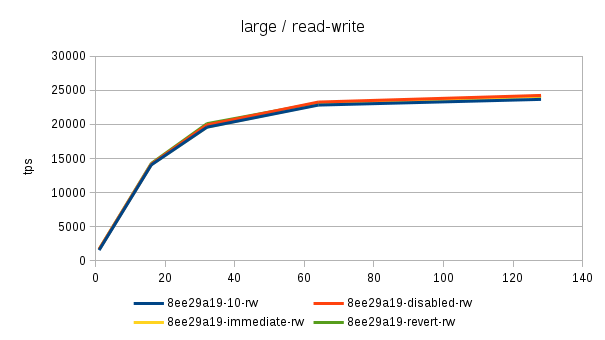

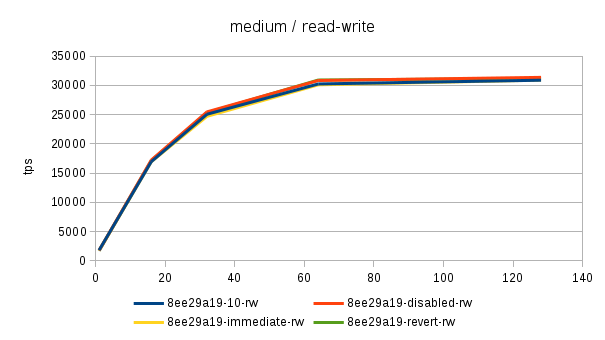

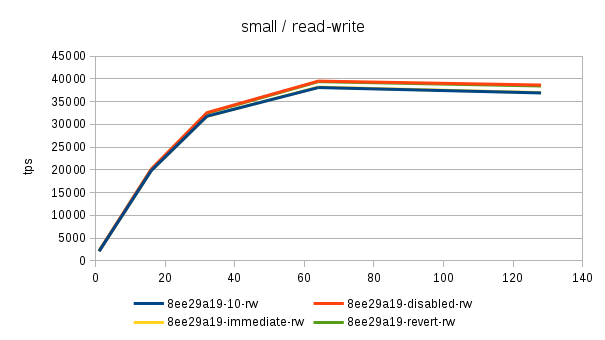

The read-write results (just the usual pgbench workload) suggest there's
not much difference; see the attached charts, where the data series
almost merge into a single line.

The only exception seems to be the "small" data set, where the
reverted/disabled cases seem to perform ~3-4% better than the
immediate/10 cases. But as Noah pointed out, this may just as well be
random noise or, more likely, due to binary layout differences.

What I still find a tiny bit annoying, though, is the volatility of the
read-only results with many clients. The last two sheets in the attached
spreadsheet measure this in two slightly different ways:

1: (MAX(x) - MIN(x)) / MAX(x)
2: STDDEV(x) / AVG(x)

Formula (1) is much more sensitive to outliers, of course, but in
general the conclusions are the same: there's a pretty clear increase
in volatility with the "immediate" setting on read-only.
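For anyone wanting to recompute the two volatility measures outside the spreadsheet, here is a minimal Python sketch. The function names and the sample tps figures are hypothetical (not taken from the results above), and it assumes formula (2) uses the sample standard deviation (n - 1 denominator, as LibreOffice Calc's STDEV does), which the message does not state explicitly.

```python
import statistics


def range_ratio(tps: list[float]) -> float:
    """Formula (1): (MAX(x) - MIN(x)) / MAX(x).

    Depends only on the two extreme runs, so one outlier decides its value.
    """
    hi, lo = max(tps), min(tps)
    return (hi - lo) / hi


def coeff_of_variation(tps: list[float]) -> float:
    """Formula (2): STDDEV(x) / AVG(x).

    Assumption: sample standard deviation (n - 1 denominator),
    which is what statistics.stdev computes.
    """
    return statistics.stdev(tps) / statistics.mean(tps)


if __name__ == "__main__":
    # Hypothetical tps numbers for repeated runs of one configuration;
    # NOT data from the benchmark discussed in this thread.
    steady = [100.0, 101.0, 99.0, 100.0, 100.0]
    with_outlier = [100.0, 101.0, 99.0, 100.0, 60.0]

    for name, runs in (("steady", steady), ("with_outlier", with_outlier)):
        print(f"{name}: (1) = {range_ratio(runs):.3f}, "
              f"(2) = {coeff_of_variation(runs):.3f}")
```

Formula (1) looks only at the best and worst run, so a single slow run sets its value outright, while formula (2) spreads the effect of that run over all samples; that is why (1) reacts more strongly to occasional outliers.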

Kevin mentioned possible kernel-related bugs. That may be the case, but
I'm not sure why that would only affect this one case (and not, for
example, the reverted case).

However, I'm inclined to take this as proof that running CPU-intensive
workloads with 128 active clients on 32 physical cores is a bad idea.
With fewer clients, no such problems occur.

That being said, I'm done with benchmarking this feature, at least for
now; I'm personally convinced the performance impact is within noise. I
may run additional tests on request in the future, but probably not
within the next month or so, as the hardware is busy with other work.

regards

--
Tomas Vondra http://www.2ndQuadrant.com
PostgreSQL Development, 24x7 Support, Remote DBA, Training & Services

| Attachment | Content-Type | Size |
|---|---|---|
| | image/png | 24.9 KB |
| | image/png | 16.8 KB |
| | image/png | 22.6 KB |
| | image/png | 18.3 KB |
| | image/png | 22.3 KB |
| | image/png | 18.8 KB |
| old-snap3.ods | application/vnd.oasis.opendocument.spreadsheet | 181.8 KB |

In response to

- Re: what to revert at 2016-05-15 20:06:28 from Tomas Vondra
