Re: [PERFORM] Slow query: bitmap scan troubles

| From: | Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

|---|---|

| To: | Simon Riggs <simon(at)2ndQuadrant(dot)com> |

| Cc: | Jeff Janes <jeff(dot)janes(at)gmail(dot)com>, pgsql-hackers <pgsql-hackers(at)postgresql(dot)org> |

| Subject: | Re: [PERFORM] Slow query: bitmap scan troubles |

| Date: | 2013-01-11 01:07:34 |

| Message-ID: | 13967.1357866454@sss.pgh.pa.us |

| Views: | Raw Message | Whole Thread | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-hackers pgsql-performance |

I wrote:

> I'm fairly happy with the general shape of this formula: it has a

> principled explanation and the resulting numbers appear to be sane.

> The specific cost multipliers obviously are open to improvement based

> on future evidence. (In particular, I intend to code it in a way that

> doesn't tie the "startup overhead" and "cost per page" numbers to be

> equal, even though I'm setting them equal for the moment for lack of a

> better idea.)

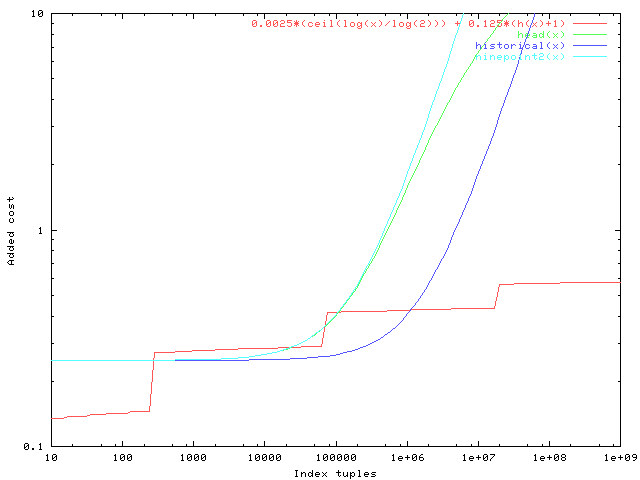

I realized that there was a rather serious error in the graphs I showed

before: they were computing the old cost models as #tuples/10000 or

#tuples/100000, but really it's #pages. So naturally that moves those

curves down quite a lot. After some playing around I concluded that the

best way to avoid any major increases in the attributed cost is to drop

the constant "costs of indexscan setup" charge that I proposed before.

(That was a little weird anyway since we don't model any similar cost

for any other sort of executor setup.) The attached graph shows the

corrected old cost curves and the proposed new one.

> One issue that needs some thought is that the argument for this formula

> is based entirely on thinking about b-trees. I think it's probably

> reasonable to apply it to gist, gin, and sp-gist as well, assuming we

> can get some estimate of tree height for those, but it's obviously

> hogwash for hash indexes. We could possibly just take H=0 for hash,

> and still apply the log2(N) part ... not so much because that is right

> as because it's likely too small to matter.

In the attached patch, I use the proposed formula for btree, gist, and

spgist indexes. For btree we read out the actual tree height from the

metapage and use that. For gist and spgist there's not a uniquely

determinable tree height, but I propose taking log100(#pages) as a

first-order estimate. For hash, I think we actually don't need any

corrections, for the reasons set out in the comment added to

hashcostestimate. I left the estimate for GIN alone; I've not studied

it enough to know whether it ought to be fooled with, but in any case it

behaves very little like btree.

A big chunk of the patch diff comes from redesigning the API of

genericcostestimate so that it can cheaply pass back some additional

values, so we don't have to recompute those values at the callers.

Other than that and the new code to let btree report out its tree

height, this isn't a large patch. It basically gets rid of the two

ad-hoc calculations in genericcostestimate() and inserts substitute

calculations in the per-index-type functions.

I've verified that this patch results in no changes in the regression

tests. It's worth noting though that there is now a small nonzero

startup-cost charge for indexscans, for example:

regression=# explain select * from tenk1 where unique1 = 42;

QUERY PLAN

-----------------------------------------------------------------------------

Index Scan using tenk1_unique1 on tenk1 (cost=0.29..8.30 rows=1 width=244)

Index Cond: (unique1 = 42)

(2 rows)

where in 9.2 the cost estimate was 0.00..8.28. I personally think this

is a good idea, but we'll have to keep our eyes open to see if it

changes any plans in ways we don't like.

This is of course much too large a change to consider back-patching.

What I now recommend we do about 9.2 is just revert it to the historical

fudge factor (#pages/100000).

Comments?

regards, tom lane

| Attachment | Content-Type | Size |

|---|---|---|

|

image/png | 4.2 KB |

| unknown_filename | text/plain | 497 bytes |

| index-access-costs-1.patch.gz | application/octet-stream | 8.5 KB |

In response to

- Re: [PERFORM] Slow query: bitmap scan troubles at 2013-01-07 18:27:38 from Tom Lane

Responses

- Re: [PERFORM] Slow query: bitmap scan troubles at 2013-01-14 16:45:01 from Robert Haas

Browse pgsql-hackers by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Tom Lane | 2013-01-11 01:12:56 | Re: PL/perl should fail on configure, not make |

| Previous Message | Josh Berkus | 2013-01-11 00:53:20 | Re: PL/perl should fail on configure, not make |

Browse pgsql-performance by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Andrzej Zawadzki | 2013-01-11 08:13:37 | Re: Slow query after upgrade from 9.0 to 9.2 |

| Previous Message | Charles Gomes | 2013-01-10 19:57:47 | Re: Partition insert trigger using C language |